The World Economic Forum’s New AI and Children Toolkit

April 25, 2022

Last month, the World Economic Forum (WEF) unveiled its much-awaited Artificial Intelligence for Children toolkit.

The non-binding document has two main goals: first, to support companies and tech innovators in developing ethical and trustworthy AI for children; and second, to educate parents and guardians on how to buy and use AI products that respect kids’ safety, data privacy, and developmental needs.

Here are the key takeaways from the toolkit — and what they might mean for the future of artificial intelligence and speech recognition.

Why Do We Need a Toolkit on AI for Children?

For the first time, an entire generation is growing up in a world where artificial intelligence is pervasive. Children start using AI-enabled products at an early age, from smart toys, video games, and social media to education technology and voice assistant solutions.

Increasingly, AI-powered algorithms determine what kids learn at school, what they watch online, and how they play and interact with peers. AI isn’t risk-free, which is why it’s important to balance the potential and functionality of AI against the need to protect children’s fundamental rights to safety and privacy.

Putting Children FIRST: The WEF’s 5 Principles for Ethical AI

In its toolkit, the WEF advocates for a “children and youth FIRST” approach to AI development. FIRST stands for:

- Fair

- Inclusive

- Responsible

- Safe

- Transparent

At the core of this approach is the idea that AI products for kids must be built specifically for them instead of adapting technology designed for adult users.

C-suite executives and product teams should put children’s needs first at every stage of the product life cycle, from design and development to deployment and beyond. The goal should be to maximize child-specific benefits and mitigate negative consequences, even where that comes at the expense of the product’s functionality.

1. Fair

To guarantee fairness, companies must consider, and proactively address, ethical and liability concerns. This includes ensuring compliance with national and international regulations such as the EU’s General Data Protection Regulation (GDPR), the Children’s Online Privacy Protection Act (COPPA) in the U.S., and the UK’s Age Appropriate Design Code, as well as best-practice guidelines like UNICEF’s Policy Guidance on AI for Children.

It’s also essential to carry out ongoing threat analysis to curb potential risks such as breaches of consent or emotional and developmental harm.

Crucially, companies must take steps to understand and minimize the inherent bias in their technologies. Bias should be assumed and actively addressed in the training, expression, and feedback of all AI models.

2. Inclusive

In this context, inclusivity means ensuring that AI-enabled products are accessible to kids of different ages, cultures, linguistic backgrounds, and developmental stages, including children with physical, mental, and learning disabilities.

When it comes to children, inclusivity is paramount. Adults can usually understand why they are excluded from using a particular product (for instance, because accessibility is expensive or because the technology wasn’t built for their specific use case). Kids, however, may not be able to grasp those reasons, and feeling excluded could lead them to doubt their abilities, hurting their confidence and development.

Given its importance, accessibility shouldn’t be an afterthought; it must be built in from the start. To that end, companies should consult not just technology experts but also specialists in child development, child rights, learning sciences, and psychology. Development and testing cycles must also include feedback from parents, guardians, and children themselves.

3. Responsible

Responsible AI companies keep up with and incorporate the latest advancements in learning and child development science into their products. They design AI-powered tools specifically for the age and developmental stage of the intended end users rather than, as the WEF puts it, a nondescript consumer base of “small, silly adults.”

Creating responsible and age-appropriate products also involves analyzing the specific risks for target users. Kids may not fully understand the consequences of sharing data or interacting with strangers online, for instance. It’s therefore vital to ensure compliance with all relevant data protection and child safety regulations and to overcommunicate the privacy and security implications to kids and their parents, guardians, and teachers.

AI companies and tech experts should also keep in mind that they likely don’t have the knowledge or skills to adequately evaluate their products’ impact on children. It’s best to err on the side of caution and consult with legal and child development experts throughout the product life cycle.

4. Safe

This principle can also be expressed as “Do no harm.” Key concerns here include data privacy, cybersecurity, and addiction mitigation.

AI tools must have built-in protections for user and purchaser data, including comprehensive yet easy-to-understand disclosures on how data is collected, processed, and used. Users must also have the option to withdraw consent and have their information removed or erased at any time.

Companies should also conduct scenario planning and user research to anticipate malicious or unintended use cases and plan mitigation strategies. It’s also important to acknowledge the potential for overuse and proactively build addiction mitigation into products.

5. Transparent

Last but not least, companies need to ensure transparency. They must explain to users and buyers in non-technical language what artificial intelligence is, why it’s required in the product, and how it works.

Terms of use must be clear and accessible, explicitly disclosing:

- Potential risks

- The limitations of the product

- Use of high-risk technologies like emotion and facial recognition

- The age range, environments, and use cases for which the product is built

- Any data protection and privacy regulations the product complies with

AI solutions for kids should also ship with the most secure settings by default and only allow parents or guardians to opt in to advanced features after reading and consenting to the terms of use. They should also include alert mechanisms that prompt adult intervention if a potential threat is detected during use.

A New Labeling System for AI for Children

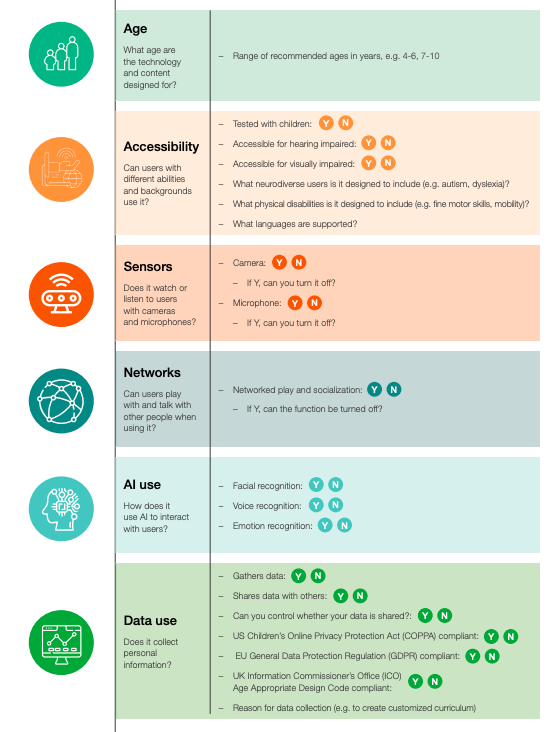

In addition to the kids-FIRST framework, the WEF’s toolkit proposes a new labeling system for AI products for children. Each label would include information about the product’s:

- Age range

- Data use

- AI use

- Accessibility

- Sensor use (cameras and/or microphones)

- Networks (whether the product enables users to socialize with others)

The labeling system is intended to work much like nutrition labels on food products, with the information available on the physical packaging and online via a QR code.

How Does Our Voice Technology Stack Up?

At SoapBox Labs, we welcome the WEF’s Artificial Intelligence for Children toolkit. Here’s how we espouse the WEF’s kids-FIRST approach to AI when it comes to our voice technology.

Fair

We’ve built our voice technology to ensure that it understands all kids’ voices equally and fairly. Our speech models have been trained on kids’ voice data from 193 countries, and our technology has been independently validated to show no bias across a wide range of accents and socioeconomic backgrounds.

Inclusive

At its core, speech recognition technology is an inclusive technology that allows kids of all ages and abilities to use their voices to independently command, control, and direct their digital experiences.

Thanks to voice technology, pre-literate kids can, for example, search through complex menus, converse with their favourite interactive TV characters, or immerse themselves in metaverse experiences without needing to press buttons, type out commands, or handle controllers.

Responsible

Unlike most other speech recognition systems, SoapBox’s voice technology was not built first for adults and does not treat kids as “small, silly adults.”

We started in 2013 by reimagining every step in the engineering and data modeling process, and the result is a world-class engine tailored specifically to kids’ voices, speech patterns, and unpredictable behaviors. Our voice technology also works for adults, whom we think of as mature, articulate, and unusually well-behaved children!

Safe

Crucially, SoapBox is a privacy-first company. We’ve developed our technology using a privacy-by-design approach, which builds in data protection from the earliest stages of the product life cycle. Any data that’s retained in our system is only used to improve our models and never shared with third parties for marketing or advertising purposes.

SoapBox is a long-time advocate for strengthening children’s data privacy and designing bias out of tech. In 2019, SoapBox Labs filed a submission on Voice Prints and Children’s Rights to the Office of the UN High Commissioner for Human Rights, explaining both the potential of voice technology for kids and the threats it poses to children’s data privacy if it isn’t built with a kids-first approach.

Transparent

To be open and transparent in our commitment to protecting kids’ data privacy, we’ve partnered since 2013 with PRIVO, an independent safe harbour organization that regularly reviews our privacy-by-design processes and certifies our GDPR and COPPA compliance.

SoapBox calls on other players in the voice technology industry to deliver similar levels of compliance and transparency when it comes to how their voice data is gathered, stored, and maintained.

Want to Learn More About Our Speech Recognition Engine?

Email us at hello@soapboxlabs.com to learn more about how our approach to building voice technology aligns with the WEF’s FIRST framework.

Ready to add voice technology to your products for kids? Complete our Get Started form and a member of our team will be in touch with next steps.